Infrastructure as Natural Language

When I first used an IaC (Infrastructure as Code) tool, I was amazed by how much better it was than clicking buttons in the AWS console. The declarative paradigm and cloud infrastructure are a very useful combination, and since then I’ve rarely gone without IaC, even for smaller projects.

Like any structured code, IaC has some pitfalls: the code takes effort and skill to write and can be verbose.

Consider this Terraform code:

    resource "aws_security_group" "PythonExecEcsSecurityGroup" {
      name        = "python-exec-ecs-security-group"
      description = "Security group for python exec ECS service"
      vpc_id      = aws_vpc.MainVpc.id

      egress {
        from_port   = 443
        to_port     = 443
        protocol    = "tcp"
        cidr_blocks = ["0.0.0.0/0"]
      }

      ingress {
        from_port       = var.container_port
        to_port         = var.container_port
        protocol        = "tcp"
        security_groups = [aws_security_group.ServerEcsSecurityGroup.id]
      }
    }

Without losing information, we can describe this in English more naturally and in less space. Here is one possible English version:

    Resource: AWS SecurityGroup
    ID: "PythonExecEcsSecurityGroup"
    In MainVpc
    Name: "python-exec-ecs-security-group"
    Description: "Security group for python exec ECS service"
    Egress: allow all tcp traffic on port 443
    Ingress: allow access on "tcp" protocol, ${container_port} port, and limited to ServerEcsSecurityGroup

Let’s compare the two versions side by side.

Terraform:

- Declarative: Describes the desired end state

- Actionable: Used by Terraform Core to execute the program

- Structured Syntax: Adheres to HCL syntax rules

- Specific Vocabulary: Requires specific keys and values

English:

- Declarative: Describes the desired end state

- Not Directly Actionable: Needs interpretation before it can be used by Terraform Core

- Flexible Syntax: No special syntax rules

- Open Vocabulary: No strict requirements on word choice, can be highly varied

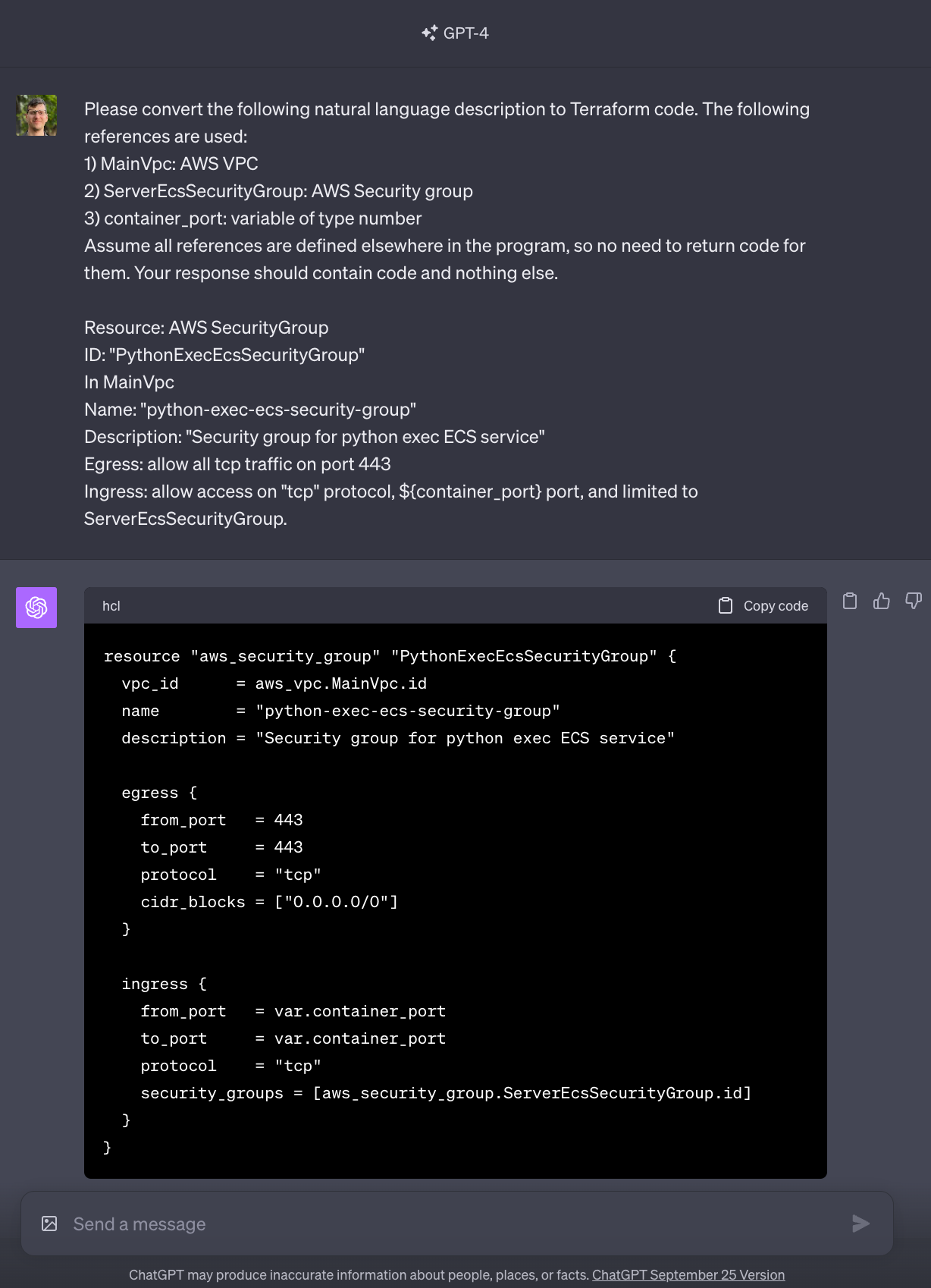

Traditionally, computers have struggled to interpret freeform natural language. LLMs have unlocked that capability. Below is my exchange with ChatGPT. I’ve added some context and instructions to the prompt to make it easier for the model to understand what I want:

Using an LLM as a probabilistic compilation stage, we’ve converted declarative natural language into declarative computer instructions. And it turned out to be valid code that accurately captured my intentions¹. This is significant because it shows that English descriptions can be actionable.
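To make the idea of a “compilation stage” concrete, here is a minimal Python sketch. It is an illustration under assumptions, not the prompt or code used in the exchange above: the instruction text, the function names build_prompt and compile_description, and the llm callable (any function that takes a prompt string and returns the model’s reply) are all hypothetical.

```python
from typing import Callable

# Hypothetical instruction text; the prompt actually used in the post is not shown.
INSTRUCTIONS = (
    "Convert the infrastructure description below into Terraform (HCL). "
    "Return only the code, with no commentary."
)


def build_prompt(description: str, context: str = "") -> str:
    """Combine instructions, optional context, and the English description."""
    parts = [INSTRUCTIONS]
    if context:
        parts.append("Context:\n" + context.strip())
    parts.append("Description:\n" + description.strip())
    return "\n\n".join(parts)


def compile_description(
    description: str,
    llm: Callable[[str], str],  # assumed: prompt in, model reply out
    context: str = "",
) -> str:
    """Run the probabilistic 'compilation stage': English in, Terraform text out."""
    return llm(build_prompt(description, context)).strip()
```

Passing the model in as a plain callable keeps the sketch independent of any particular LLM client, and makes it easy to swap in a stub when experimenting.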

Software engineers spend a lot more time reading code than writing it. It’s very useful to ask AI to write code on an ad-hoc basis, but the medium of my work is still code, even if the LLM assists me in reading and writing it. It’s useful to think of natural language as a “high-level programming language”. When I use Python, I don’t just use it on an ad-hoc basis to generate a lower-level representation. When I “program” using chat prompts and natural language, it would likewise be nice to stay in natural language without having to switch back to structured code. Practically, why not combine IaC with natural language in a declarative way?

Introducing Salami

In late August, I first thought about what such a tool would look like. Today, I am pleased to announce the release of the initial version of Salami. Salami is a declarative domain-specific language for cloud infrastructure based on natural language descriptions. The Salami compiler uses GPT4 to convert the natural language to Terraform code. It is still experimental, but it already works well for some use cases. For example, this non-trivial AWS application was written entirely in Salami!

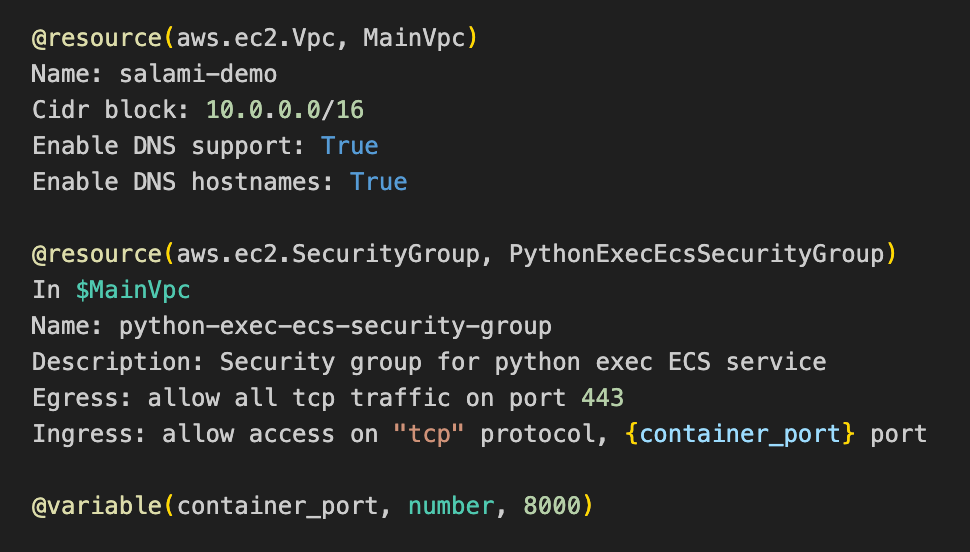

Here’s some example Salami code that declares a VPC resource, a Security Group resource, and a container_port variable:

It’s worth noting that Salami produces better-quality code than the raw GPT4 model. Initially, about 20% of GPT4 generations contained invalid code. After I implemented a validation step in the compiler, that dropped to zero for my test examples. The validation step passes the generated code through several rounds of terraform validate and sends any errors back to the LLM for correction. Similar methods have the potential to improve the model output even more.
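The validate-and-correct loop can be sketched in a few lines of Python. This is a rough illustration of the idea, not Salami’s actual implementation: compile_with_validation, the llm and validate callables, the max_rounds default, and the wording of the correction prompt are all assumptions. In practice, validate would wrap a call to terraform validate and return its error messages; here it is just a function that returns a list of error strings (empty when the code is valid).

```python
from typing import Callable, List


def compile_with_validation(
    prompt: str,
    llm: Callable[[str], str],             # assumed: prompt in, generated code out
    validate: Callable[[str], List[str]],  # assumed: code in, list of errors out
    max_rounds: int = 3,                   # hypothetical cap on correction attempts
) -> str:
    """Generate code, then feed validation errors back to the model until clean."""
    code = llm(prompt)
    for _ in range(max_rounds):
        errors = validate(code)
        if not errors:
            return code
        # Ask the model to correct its own output, given the reported errors.
        fix_prompt = (
            prompt
            + "\n\nPrevious attempt:\n" + code
            + "\n\nValidation errors:\n" + "\n".join(errors)
            + "\n\nReturn corrected Terraform code only."
        )
        code = llm(fix_prompt)
    # Check the result of the last correction before giving up.
    errors = validate(code)
    if errors:
        raise ValueError("code still invalid after corrections: " + "; ".join(errors))
    return code
```

Capping the number of rounds matters because the model is probabilistic: there is no guarantee that a correction converges, so the loop needs a point at which it reports failure instead of retrying forever.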

To prevent unnecessary regenerations, Salami implements incremental compilation via a lock file, which stores the latest Salami descriptions and the resulting Terraform code. When salami compile is run, it determines exactly which objects have changed since the last compilation and sends only those objects to the LLM. In addition, for updates, the prompts include both the old and the new versions of the object. Including the old version has effectively stopped GPT4 from introducing irrelevant changes during an update; instead, it uses the old version as the “baseline” to which it applies the corrections.
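Here is a small Python sketch of how such incremental compilation could work. It assumes a simplified lock file that maps each object ID to its last description and generated Terraform; the real lock file format is not described here, and LockEntry, changed_objects, removed_objects, build_object_prompt, and the prompt wording are all hypothetical names chosen for illustration.

```python
from typing import Dict, List, NamedTuple, Optional


class LockEntry(NamedTuple):
    """Assumed lock file record for one object."""
    description: str  # Salami description at the last compilation
    terraform: str    # Terraform code generated from that description


def changed_objects(lock: Dict[str, LockEntry], current: Dict[str, str]) -> List[str]:
    """Return IDs of objects that are new or whose description changed."""
    return [
        obj_id
        for obj_id, desc in current.items()
        if obj_id not in lock or lock[obj_id].description != desc
    ]


def removed_objects(lock: Dict[str, LockEntry], current: Dict[str, str]) -> List[str]:
    """Return IDs present in the lock file but no longer described."""
    return [obj_id for obj_id in lock if obj_id not in current]


def build_object_prompt(new_description: str, old: Optional[LockEntry]) -> str:
    """For updates, include the old version so the model treats it as a baseline."""
    if old is None:
        return "Generate Terraform for:\n" + new_description
    return (
        "Old description:\n" + old.description
        + "\n\nOld Terraform:\n" + old.terraform
        + "\n\nNew description:\n" + new_description
        + "\n\nUpdate the old Terraform to match the new description. "
        "Change only what the new description requires."
    )
```

Unchanged objects never reach the model, so their Terraform stays byte-for-byte identical between compilations, which also keeps the resulting diffs small and reviewable.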

For an overview of the entire architecture, see the architecture doc.

Here’s a quick demo of Salami:

Salami makes it easier to program in natural language, without switching and copy-pasting. It’s also a more accurate tool than GPT4 alone, as it can catch errors and generate code that is more likely to be correct. Salami is a work in progress, and we are grateful for any feedback and contributions. Open an issue on GitHub or reach out to me directly if you have any questions or ideas.

Thanks to Nikita Gazarov, Adriaan Pelzer, Damon Anderson and Chris Lovato for contributing their invaluable ideas and feedback on this project. Special shoutout to Owusu Appiah for contributing code. Each one of you is awesome and I’m humbled and grateful for your support.

Footnotes

1. It’s worth noting that even state-of-the-art LLMs like GPT4 make mistakes quite often. I expect this to improve significantly with stronger models, but a “compiler” that converts natural language to Terraform code will remain probabilistic rather than deterministic, unlike traditional compilers.